RF-DETR (Region-Focused DETR), proposed in April 2025, is an object detection architecture designed to overcome fundamental drawbacks of the original DETR (DEtection TRansformer). This article covers RF-DETR's contributions and architecture, compares it with DETR and the improved D-FINE model, reports benchmark figures, and discusses real-world applicability.

|

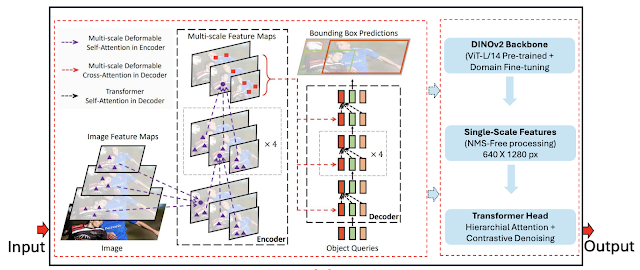

| RF-DETR Architecture diagram for object detection |

Limitations of DETR

DETR revolutionized object detection by leveraging the Transformer architecture, enabling end-to-end learning without anchor boxes or NMS (Non-Maximum Suppression). However, DETR has notable limitations:

- Slow convergence, requiring heavy data augmentation and long training schedules

- Degraded performance on small or low-resolution objects and in complex scenes

- Lack of locality due to global self-attention mechanisms
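To make the locality point concrete, the short calculation below counts how many token pairs a single global self-attention layer has to score. The 800×1216 input size and the stride of 32 are illustrative assumptions, not figures from this article, and `attention_pairs` is a hypothetical helper name.

```python
from typing import Tuple


def attention_pairs(height: int, width: int, stride: int) -> Tuple[int, int]:
    """Return (token count, pairwise attention scores) for one feature map.

    Global self-attention scores every token against every other token,
    so the number of scores grows with the square of the token count.
    """
    tokens = (height // stride) * (width // stride)
    return tokens, tokens * tokens


# Assumed example: an 800x1216 image downsampled by a stride-32 backbone.
tokens, pairs = attention_pairs(800, 1216, 32)
print(tokens, pairs)  # 950 902500
```

Every one of those pairs is weighted without any built-in preference for nearby positions, which is the lack of locality described above.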

Key Innovations in RF-DETR

RF-DETR introduces three key architectural improvements to address DETR’s weaknesses:

1. Region Proposal Encoder (RPE)

DETR's object queries are learned embeddings that do not depend on the input image and carry no localization prior. RF-DETR instead constructs region tokens from dense CNN feature maps. These region tokens encode both spatial position and visual semantics, and each one represents a plausible object region. This structured representation helps the Transformer focus on local object information and improves training efficiency.
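One way to picture region tokens is as pooled patches of a feature map with their position attached. The sketch below is a minimal illustration under that assumption: it average-pools a toy feature map over a fixed grid and appends each cell's normalized center. The function name `region_tokens`, the fixed grid, and the nested-list feature map are all hypothetical simplifications and are not taken from the RF-DETR implementation.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [row][column][channel]


def region_tokens(fmap: FeatureMap, grid: int) -> List[List[float]]:
    """Average-pool a feature map into grid x grid region tokens.

    Each token is the mean feature vector of its cell followed by the
    cell's normalized center (cx, cy), a simple spatial prior.
    Assumes the feature map has at least `grid` rows and columns.
    """
    height, width = len(fmap), len(fmap[0])
    channels = len(fmap[0][0])
    tokens = []
    for gy in range(grid):
        y0, y1 = gy * height // grid, (gy + 1) * height // grid
        for gx in range(grid):
            x0, x1 = gx * width // grid, (gx + 1) * width // grid
            cells = [fmap[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            mean = [sum(c[k] for c in cells) / len(cells) for k in range(channels)]
            cx = (x0 + x1) / 2 / width
            cy = (y0 + y1) / 2 / height
            tokens.append(mean + [cx, cy])
    return tokens


# Toy 4x4 map with 2 channels: channel 0 holds the row index, channel 1 the column index.
fmap = [[[float(y), float(x)] for x in range(4)] for y in range(4)]
tokens = region_tokens(fmap, grid=2)
print(len(tokens))  # 4
print(tokens[0])    # [0.5, 0.5, 0.25, 0.25]
```

A real encoder would likely pool learned proposals rather than a fixed grid; the grid only keeps the example small.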

2. Token-to-Region Attention (TRA)

Standard Transformer attention lets every query attend over all spatial tokens with no built-in locality bias, which can dilute important local features. RF-DETR introduces a Token-to-Region Attention mechanism that explicitly connects each object query with its most relevant region tokens. Conditioning queries on these semantically rich region tokens improves the model’s localization precision.
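The idea of connecting a query only to its most relevant region tokens can be sketched as scaled dot-product attention restricted to the top-k scoring regions. This is an assumed formulation for illustration: the article does not specify how relevance is computed, and `token_to_region_attention` and `top_k` are hypothetical names.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def token_to_region_attention(query: Vector, regions: List[Vector], top_k: int) -> Vector:
    """Attend from one query to its top_k most similar region tokens.

    Regions outside the top_k are dropped before the softmax, so they
    contribute nothing to the output.
    """
    scale = math.sqrt(len(query))
    scores = [dot(query, r) / scale for r in regions]
    keep = sorted(range(len(regions)), key=lambda i: scores[i], reverse=True)[:top_k]
    peak = max(scores[i] for i in keep)
    weights = [math.exp(scores[i] - peak) for i in keep]  # numerically stable softmax
    total = sum(weights)
    out = [0.0] * len(regions[0])
    for w, i in zip(weights, keep):
        for k, value in enumerate(regions[i]):
            out[k] += (w / total) * value
    return out


regions = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
query = [1.0, 0.0]
attended = token_to_region_attention(query, regions, top_k=2)
print(attended)
```

With `top_k=2` the output is a weighted blend of the first two regions only; the dissimilar third and fourth regions are ignored rather than merely down-weighted.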

3. Region-aware Query Initialization

Unlike DETR’s learned query embeddings, RF-DETR initializes object queries using the region proposal tokens themselves. These initialization vectors carry spatial priors and appearance cues from the candidate regions, allowing the model to align with ground truth objects more quickly and leading to significantly faster convergence.
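As a rough sketch of region-aware initialization, the snippet below copies the highest-scoring region tokens to serve as the starting object queries. The per-region objectness score and the name `init_queries` are assumptions made for illustration; the article does not say how candidate regions are ranked.

```python
from typing import List, Tuple


def init_queries(
    region_tokens: List[List[float]],
    objectness: List[float],
    num_queries: int,
) -> Tuple[List[List[float]], List[int]]:
    """Copy the num_queries highest-scoring region tokens as initial queries.

    Returns the query vectors and the indices of the regions they came from.
    """
    ranked = sorted(range(len(region_tokens)), key=lambda i: objectness[i], reverse=True)
    chosen = ranked[:num_queries]
    return [list(region_tokens[i]) for i in chosen], chosen


# Four toy region tokens with assumed objectness scores.
region_tokens = [[0.1, 0.2], [0.7, 0.3], [0.4, 0.4], [0.9, 0.8]]
objectness = [0.1, 0.8, 0.3, 0.9]
queries, source_regions = init_queries(region_tokens, objectness, num_queries=2)
print(source_regions)  # [3, 1]
print(queries)         # [[0.9, 0.8], [0.7, 0.3]]
```

Because each query starts as a copy of a concrete region, it already carries that region's position and appearance before the first decoder layer runs.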

Each component contributes its own gain, and the three reinforce one another: region tokens supply the priors, region-aware initialization places queries near likely objects, and TRA keeps each query focused on its region. Together they improve both accuracy and training efficiency.

RF-DETR Architecture Overview

The overall pipeline of RF-DETR is composed of four main stages:

- Backbone CNN extracts multi-scale visual features.

- Region Proposal Encoder (RPE) transforms these features into region-aware tokens using RoI pooling or learnable region groupings.

- Transformer Decoder accepts these region tokens as initialized object queries and processes them via Token-to-Region Attention (TRA).

- Prediction Head generates final classification and bounding box outputs.

This modular flow allows RF-DETR to seamlessly integrate region priors while maintaining the end-to-end learnability of the original DETR design.

Comparison with D-FINE

D-FINE, released in late 2024, introduced fine-grained cross-attention and multi-scale feature fusion for faster convergence and better accuracy. The table below summarizes the key distinctions among DETR, D-FINE, and RF-DETR:

| Feature | DETR | D-FINE | RF-DETR |
|---|---|---|---|
| Convergence Speed | Slow | Faster | Much Faster |
| Locality Awareness | Minimal | Partial | Explicit region-based attention |
| Inference Speed | Slow | Moderate | Fast |
| Performance on Complex Scenes | Low | Improved | Excellent |

Comparison of Object Detection Models (COCO Benchmark and Real-World Dataset)

The table below compares three DETR-based object detectors: DETR, D-FINE, and the more recent RF-DETR. It lists COCO benchmark mAP, inference latency on an NVIDIA T4 GPU, accuracy on the RF100-VL real-world dataset, and key architectural characteristics. Among the three, RF-DETR reports the highest COCO mAP and is the only model with a measured latency and an RF100-VL score in this table.

| Model | COCO mAP (@[.5:.95]) | Inference Speed (ms/img, T4 GPU) | RF100-VL mAP (Real-World) | Key Features |
|---|---|---|---|---|
| DETR | ≈ 42.0 | Slow | - | Global attention only, slow convergence, lacks local context |
| D-FINE | ≈ 46.5 | Moderate | - | Multi-scale feature fusion, fine-grained interaction layers |
| RF-DETR | 60+ | 6.0 | 86.7 | Region-aware tokens, rapid convergence, real-time inference, region-focused attention |
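For readers unfamiliar with the "@[.5:.95]" notation in the table header: COCO mAP averages average precision (AP) over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows the IoU computation and the threshold set. The boxes are made-up examples, and `iou` and `coco_map` are hypothetical helper names, not the official COCO evaluation API.

```python
from typing import List, Sequence


def iou(box_a: Sequence[float], box_b: Sequence[float]) -> float:
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds behind "@[.5:.95]": 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def coco_map(ap_per_threshold: List[float]) -> float:
    """Average the AP values computed at each of the ten IoU thresholds."""
    assert len(ap_per_threshold) == len(IOU_THRESHOLDS)
    return sum(ap_per_threshold) / len(ap_per_threshold)


# A made-up prediction that is slightly too short compared with the ground truth.
ground_truth = (0.0, 0.0, 10.0, 10.0)
prediction = (0.0, 0.0, 10.0, 8.2)
overlap = iou(ground_truth, prediction)
passed = [t for t in IOU_THRESHOLDS if overlap >= t]
print(round(overlap, 2), len(passed))  # 0.82 7
```

A detection with IoU 0.82 counts as correct at the seven loosest thresholds and as a miss at the three strictest, which is why this metric rewards tight localization more than AP at a single 0.50 threshold.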

Conclusion

RF-DETR addresses DETR’s limitations in convergence and locality. Its region-focused design makes it well suited to real-time applications and complex scene understanding, and the figures above show gains over both DETR and D-FINE, making it a compelling advancement in Transformer-based object detection.
